Healthcare has long been a paper-based industry, but digital transformation is reshaping the landscape. Digital technologies and cloud computing are redefining the way healthcare is delivered, experienced, and managed, while also improving operational efficiency and driving breakthroughs in medical innovation.

What is Digital Transformation in Healthcare?

Digital transformation projects go beyond digitizing patient records. They can include a wide variety of measures that improve patient outcomes, speed up common processes, and upgrade the overall patient experience. We’ll share the many benefits digital transformation brings to healthcare, some common use cases, and what healthcare organizations should think about to meet the evolving needs of patients.

What are the Benefits of Digital Transformation in Healthcare?

Digital transformation is important in healthcare for a few major reasons – it can improve patient outcomes, allow for more efficient decision-making, spur innovation, reduce costs, and open the door to greater accessibility.

Better Patient Outcomes

Some health concerns are more urgent than others, and in those cases, every moment counts. Digital health records can connect patients to their information and enable closer collaboration among clinicians. The increased transparency enabled through digital transformation can even make it easier for health professionals to diagnose and treat certain conditions more efficiently, leading to better patient outcomes.

Quicker Innovation and Informed Decision-Making

Real-time access to data and knowledge can make decision-making both faster and better informed. Providers can make time-sensitive, critical decisions more easily when they are presented with timely data.

This improved access can also speed up innovation. A shorter feedback loop can give healthcare professionals the data they need to understand the programs and features that might be most helpful to staff and patients alike.

Improved Efficiency and Cost Savings

Digital transformation projects improve efficiency in several ways. Staff can streamline administrative processes, deliver care more productively, prevent medical errors, and cut down on unneeded procedures and tests. Efficiencies such as these can save money and greatly improve patient and caregiver experiences.

Enhanced Accessibility and Convenience

Patient expectations will continue to rise with technological advances. Most people navigating the healthcare system want it to be just as easy to access their electronic medical records and reach out to a provider as it would be to order food online. Digital technologies can enhance the accessibility and convenience of the healthcare experience through telemedicine, online access to information, self-service tools, helpful automated workflows, and more.

Top Drivers for Cloud Adoption in Healthcare

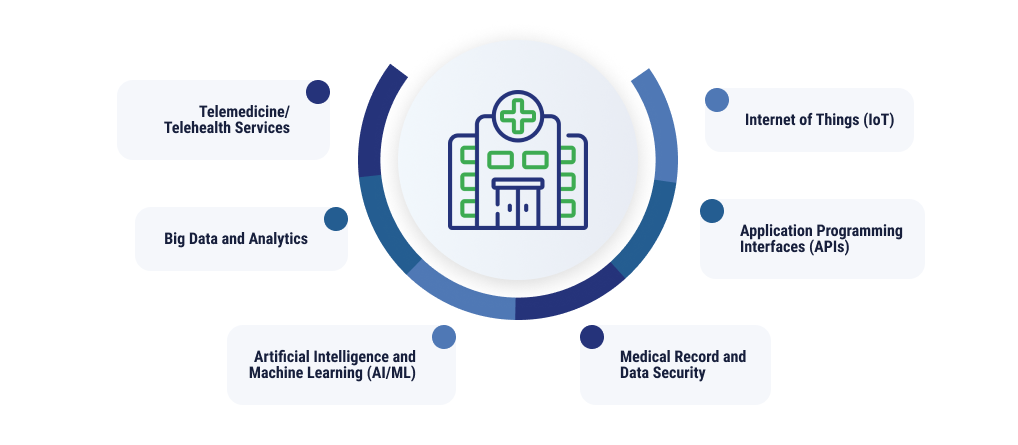

Growing patient expectations, paired with the rise in emerging technologies, such as artificial intelligence (AI) and Internet of Things (IoT) devices, are some of the major drivers of cloud adoption in healthcare.

Need for Telemedicine/Telehealth Services

The COVID-19 pandemic turned what was once an added benefit for many into a complete necessity. The use of telemedicine services didn’t just spike; it has also remained high in recent years. According to the AMA, 25% of people used telehealth services in 2022 compared with 5% pre-pandemic. Experts predict that these numbers will continue to rise.

Even before 2020, telemedicine and telehealth services played an important role in access to care for patients who live in rural areas, as well as those who have difficulty traveling to an in-person provider.

Telemedicine also delivers an added benefit for patients looking to fit an appointment into their busy schedules. Mobile health (mHealth) applications make access to services even easier, turning something that may once have required a desktop computer into an on-the-go solution.

Growth in Big Data and Analytics

Even in a paper-heavy industry, hospitals manage tons of digital data, from supply inventories and insurance data to patient medical charts, research, doctor’s notes, and diagnostic images. According to the World Economic Forum, hospitals produce 50 petabytes of data per year – that’s equivalent to 10 billion music files! However, much of this goes unused.
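
To put that comparison in perspective, the short sketch below works out the average file size it implies. It assumes decimal units (1 petabyte = 10^15 bytes, 1 megabyte = 10^6 bytes); the function name is only illustrative.

```python
# Assumption: decimal (SI) units, 1 PB = 10**15 bytes and 1 MB = 10**6 bytes.
PETABYTE = 10**15
MEGABYTE = 10**6


def implied_file_size_mb(total_petabytes: float, file_count: int) -> float:
    """Average file size in MB implied by a total data volume and a file count."""
    return total_petabytes * PETABYTE / file_count / MEGABYTE


# 50 petabytes spread across 10 billion music files
size_mb = implied_file_size_mb(50, 10_000_000_000)
print(f"Implied size per music file: {size_mb:.1f} MB")
```

In other words, the comparison assumes a music file of roughly 5 MB.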

The amount of healthcare-related data, both patient and operational, keeps growing. Data generated from diagnostic equipment, patient monitors, medical images, electronic health records (EHR), and IoT devices can help hospitals improve patient diagnosis and treatment outcomes. For example, wearable ECG monitors can remotely monitor patients and alert healthcare providers to changes that may be cause for concern.

The downside of data growth is the cost and time involved in managing huge volumes of data. Cloud-based storage can be a cost-effective way to store and back up petabytes of data, and many cloud providers offer data management services. Likewise, cloud-based content management and EHR applications enable hospitals to store large volumes of patient data without the expense of new hardware.
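
To make the wearable ECG example more concrete, here is a minimal sketch of how a monitoring service might flag readings for a provider. Everything in it is hypothetical: the `HeartRateReading` type, the function names, and especially the thresholds, which are placeholders for illustration and not clinical guidance.

```python
from dataclasses import dataclass
from typing import List, Optional

# Illustrative thresholds only (assumptions, NOT clinical guidance).
LOW_BPM = 50
HIGH_BPM = 120


@dataclass
class HeartRateReading:
    """A single heart-rate sample from a hypothetical wearable monitor."""
    patient_id: str
    bpm: int


def check_reading(reading: HeartRateReading) -> Optional[str]:
    """Return an alert message if the reading is outside the assumed range, else None."""
    if reading.bpm < LOW_BPM:
        return f"ALERT {reading.patient_id}: {reading.bpm} bpm is below {LOW_BPM}"
    if reading.bpm > HIGH_BPM:
        return f"ALERT {reading.patient_id}: {reading.bpm} bpm is above {HIGH_BPM}"
    return None


def collect_alerts(readings: List[HeartRateReading]) -> List[str]:
    """Check every reading and keep only the ones that produced an alert."""
    alerts = []
    for reading in readings:
        message = check_reading(reading)
        if message is not None:
            alerts.append(message)
    return alerts


# Made-up sample data for demonstration
sample = [
    HeartRateReading("patient-001", 72),
    HeartRateReading("patient-002", 134),
    HeartRateReading("patient-003", 44),
]
for alert in collect_alerts(sample):
    print(alert)
```

A production system would likely do far more than compare against fixed limits, but the flow is the same: readings stream in, rules are applied, and only the exceptions reach a clinician.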

Advancements in Artificial Intelligence and Machine Learning

The more patient data is used to improve diagnosis and outcomes, the more the experience improves in general. Data analytics and AI-based diagnostic tools can help healthcare organizations get there. Doctors and scientists can uncover valuable insights and determine optimal treatment strategies for patients.

Experts predict that these technologies will come into play more with devices used in the early stages of patient care, such as glucose monitoring and neuromonitoring tools. About one-quarter of all medical devices currently use AI/ML technology, and that number will only grow.

Rise of Internet of Things

While remote patient monitoring is becoming more popular, one of the most widespread uses of IoT devices in healthcare has come from the ubiquitous nature of wearable devices. An estimated 492.1 million wearable devices were shipped globally in 2022. These devices can provide valuable insight from long-term, regular data collection, and can add more context to annual wellness checkups.

IoT devices have also made their way into the healthcare space through implantable devices, environmental controls, smart beds, robot-assisted surgical devices, and more.

Flow of Data Between Platforms and APIs

The cloud provides a cost-effective platform for sharing data between healthcare providers. Hospitals, clinics, pharmacies, and insurers can share data and files over a common cloud platform using healthcare interoperability standards and Application Programming Interfaces (APIs) – a capability that isn’t possible with legacy EHR systems. Cloud-based electronic records applications and healthcare exchanges also enable data sharing between disparate cloud healthcare applications.

In addition, many leading cloud platforms, including Azure Health Data Services and Google Cloud, have APIs based on open healthcare standards for data exchange. These include Digital Imaging and Communications in Medicine (DICOM) for diagnostic images and Fast Healthcare Interoperability Resources (FHIR), which defines both data formats and an API for exchanging electronic health records.
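
As a rough illustration of what FHIR-style exchange looks like, the sketch below builds a minimal Patient resource and the URL for a FHIR "read" request, which follows the pattern `[base]/[type]/[id]`. The base URL, patient details, and helper names are made up for the example; a real deployment would use the endpoint and authentication required by its own FHIR server.

```python
import json

# Hypothetical base URL; a real FHIR server supplies its own endpoint.
FHIR_BASE_URL = "https://fhir.example.org/r4"


def fhir_read_url(base_url: str, resource_type: str, resource_id: str) -> str:
    """Build the URL for a FHIR 'read' interaction: GET [base]/[type]/[id]."""
    return f"{base_url.rstrip('/')}/{resource_type}/{resource_id}"


def build_patient(patient_id: str, family: str, given: str, birth_date: str) -> dict:
    """Build a minimal FHIR Patient resource as a plain dictionary."""
    return {
        "resourceType": "Patient",
        "id": patient_id,
        "name": [{"family": family, "given": [given]}],
        "birthDate": birth_date,  # FHIR dates use the YYYY-MM-DD format
    }


# Made-up patient used purely for demonstration
patient = build_patient("example-123", "Rivera", "Alex", "1980-04-12")
print(fhir_read_url(FHIR_BASE_URL, "Patient", patient["id"]))
print(json.dumps(patient, indent=2))
```

Because every participant agrees on the resource shape and URL pattern, a hospital, a pharmacy, and an insurer can exchange the same record without custom point-to-point integrations.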

Securing Medical Records and Vital Patient Data

One of the main reasons healthcare leaders have been tentative about moving to the public cloud is security concerns, but trust has slowly been growing, with about one-third of healthcare organizations utilizing public cloud in 2022. Managed public cloud providers today are also secured by multiple advanced technologies, including encryption, identity and access control, intrusion prevention and detection, real-time security intelligence feeds, and threat management. A good cloud security services provider will have experience in both the healthcare industry and cloud security, as well as certifications covering government regulations and industry best practices.

Healthcare Cloud Adoption Use Cases

In today’s digital landscape, healthcare organizations don’t have to decide between digital transformation, security, and compliance concerns. The cloud is well-equipped to meet the needs of the industry with multiple use cases:

- Dental Associates worked with TierPoint to maintain a hybrid cloud solution that assured patient data and HIPAA-related information remained secure

- Clario Medical supported its rapid growth with TierPoint’s customized private cloud (IaaS) and managed services solutions that were designed with security and compliance in mind

- RMS transformed its patchwork of IT infrastructure to a private cloud that enabled rapid scalability and increased reliability. With TierPoint’s hybrid cloud/private cloud DRaaS services, they were able to improve their business continuity and disaster recovery measures, while increasing and maintaining full production capacity

How to Approach Digital Transformation as a Healthcare Provider

Digital transformation and the adoption of public cloud services have increased in every industry, but healthcare is still behind the curve. In fact, the adoption rate for cloud services in healthcare is 50% of that in other industries. This is due, in part, to healthcare organizations’ distrust of the cloud.

Modern healthcare providers that want to embrace digital transformation must be mindful of this statistic and approach digital transformation projects with strategy, change management, and training at the forefront.

The Role of Leadership

Because healthcare is an industry that’s trailing behind in terms of digital transformation, a leading voice is key. Effective leaders in healthcare digital transformation need to have a clear vision of the goals and objectives their organization wants to reach, and how these digital projects will get them there.

By encouraging a culture of innovation, staying open-minded to new technologies, and empowering teams to explore modern digital transformation initiatives, leaders can set the tone from the top that organizations don’t have to keep doing things the way they’ve always done them.

Reiterate to team members that this isn’t a fly-by-night idea by providing regular updates on digital transformation efforts. Allocate and share resources to complete projects, and keep all relevant team members informed about successes, setbacks, and progress along the way.

Creating a Strategy

After you’ve delivered a clear message on overall goals, create and deliver a well-defined strategy that addresses:

- The current technology infrastructure of the organization. What processes, infrastructure, and data management practices are currently being used?

- The end goals of digital transformation. What does “success” look like when it comes to these efforts? Examples include reducing wait times to hear from doctors and improving patient outcomes.

- The first steps the organization will take to reach these goals through digital transformation.

- The implementation plan for the first steps. Who needs to be involved, how long will it take, what resources need to be gathered to pull it off, and how does the end of the process tie to the identified success metrics?

Encouraging and Managing Change

Even with the right strategy and appropriate tools, managing change can be one of the hardest parts of digital transformation. Oftentimes, these projects require major changes to technology, workflows, and culture. Healthcare providers who are leading the charge on this type of modernization need to get buy-in from all levels of the organization in all relevant departments.

Investing in Internal Training and Development

Clinicians, patients, and administrative staff all need to be brought up to speed on what the changes will mean for their everyday tasks and interactions. Some new tools will undoubtedly require training and support to spur adoption and encourage retention.

During training and in times of change, leaders should not dismiss concerns. Instead, they should open avenues for team members to voice their worries about changes to workload or the impact that new technology can have on patient care.

Sometimes, these concerns may point to gaps in internal talent. Tech industry executives are finding it hard to hire technology talent, especially senior technical roles such as cybersecurity specialists and system architects. About 60% of tech industry leaders say that recruiting talent is a major challenge for their company, and approximately 80% say that fostering innovation is a moderate or major challenge as well. Healthcare organizations struggling to fill their internal cloud skills gaps can benefit from third-party providers with extensive experience in planning and executing digital transformation initiatives.

Selecting a Cloud Provider for Your Journey to the Cloud

Cloud providers offer a range of services for data interoperability, data security, cloud migration, and cloud connectivity options to promote innovation and help healthcare companies become more competitive.

Set your journey to the cloud off on the right foot with professional guidance on the right cloud provider for your needs. Whether you’re looking to make the leap to public cloud, or you want to house your current systems with a colocation provider, TierPoint can help with our digital solutions for healthcare businesses.

Is your team prepared to navigate your digital transformation? Download our eBook to discover the technical skills and resources you should have on-hand to ensure a successful transition to the cloud.

FAQs

The cloud is used in healthcare in many different ways – medical imaging, telehealth, electronic health records, streamlining administrative tasks, diagnostic tools through AI/ML, and more.

The adoption rate for cloud computing in healthcare is lower than in other industries – 47% of healthcare organizations’ sensitive data is stored in the cloud, compared to 61% in other industries.

Some of the biggest challenges that stand to impede digital transformation in healthcare include compliance and regulatory standards, interoperability between platforms and data, and data security and privacy concerns.